Do gravitational anomalies prove we're not living in a computer simulation?


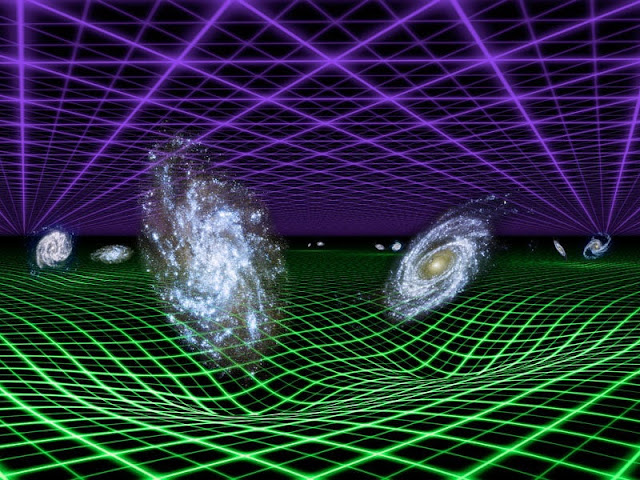

Researchers from Oxford and the Hebrew University believe they've found proof that the universe is too complex to exist inside a computer simulation (Credit: NASA/JPL-Caltech)

Is our entire universe just a computer simulation? It sounds like the premise for a sci-fi movie, but over the years scientists have debated the idea in earnest. Now, theoretical physicists believe they've found proof that our universe is far too complex to be captured in any simulation. According to the researchers, the hypothesis is undone by gravitational anomalies, tiny "twists" in the fabric of spacetime.

For many, the idea that our civilization might exist inside a simulation goes back to the movie The Matrix, but it has actually been discussed in scientific circles as a legitimate possibility, including at the Isaac Asimov Memorial Debate at the American Museum of Natural History last year. Oxford philosopher Nick Bostrom proposed the idea in a 2003 paper, and the crux of his argument is essentially a numbers game.

The simulation argument

Essentially, Bostrom suggested that at the rate technology is advancing today, it's likely that future generations will have access to supercomputers beyond our imagining. And since we tend to use computers to run (relatively primitive) simulations with our current technology, those future humans (or another advanced species) would likely do the same, perhaps simulating their ancestors. And with all that extra processing power at their disposal, it follows that they would run many simulations simultaneously.

As a result, artificial universes would vastly outnumber the one "real" universe, so statistically it's far more likely that we live in one of these simulations. Astrophysicist Neil deGrasse Tyson puts our odds of living in a simulation at 50/50, while Elon Musk is far less optimistic, saying the chance is "one in billions" that we inhabit the one true world.
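
The statistical step in Bostrom's argument can be sketched with simple counting. The Python below is a minimal illustration, not Bostrom's own formula: it assumes (hypothetically) that every universe, real or simulated, holds the same number of observers, so with N simulations running, the chance of being in the one real universe is 1/(N + 1). The function name `chance_of_base_reality` is made up for this example.

```python
from fractions import Fraction


def chance_of_base_reality(n_simulations: int) -> Fraction:
    """Probability of living in the single 'real' universe.

    Simplifying assumption (hypothetical): every universe, real or
    simulated, contains the same number of observers, so each universe
    is equally likely to be ours.
    """
    if n_simulations < 0:
        raise ValueError("n_simulations must be non-negative")
    total_universes = n_simulations + 1  # the simulations plus one real universe
    return Fraction(1, total_universes)


for n in (1, 1_000, 1_000_000_000):
    print(f"{n:>13,} simulations -> {float(chance_of_base_reality(n)):.2e}")
```

The output simply shows how quickly the odds of being "real" shrink as the number of simulations grows.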


Artistic impression of a space-time twist in a crystal (Credit: Oxford University)

Taking the idea to the extreme, some even blame the election of Trump and the unprecedented Best Picture mixup at this year's Oscars on malicious higher beings deliberately messing with our virtual world, like bored SimCity players.

Gravitational anomalies

While it sounds like a fun thought experiment that's impossible to verify, researchers at Oxford and the Hebrew University may now have proven that the universe is far too complex to simulate. The key is a quantum phenomenon known as the thermal Hall effect, whose quantized conductance is a telltale signature of a gravitational anomaly.

These anomalies have been known for decades, but are notoriously difficult to detect directly. Effectively representing twists in spacetime, they show up in physical systems where magnetic fields generate energy currents that run perpendicular to a temperature gradient, particularly when strong magnetic fields and very low temperatures are involved.
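
For readers who want a number: in the standard theoretical picture, the thermal Hall conductance of such a system is quantized as κ_xy = c·π²k_B²T/(3h), where c is the so-called chiral central charge that measures the anomaly, T is the temperature, k_B is Boltzmann's constant and h is Planck's constant. This relation is general textbook background rather than a result of the new paper, and the Python below is only a sketch that evaluates it; the function name is hypothetical.

```python
import math

# Exact SI values of the physical constants (2019 SI redefinition).
BOLTZMANN_K = 1.380649e-23   # J/K
PLANCK_H = 6.62607015e-34    # J*s


def thermal_hall_conductance(central_charge: float, temperature_k: float) -> float:
    """Quantized thermal Hall conductance in W/K.

    kappa_xy = c * pi^2 * k_B^2 * T / (3 h), where c is the chiral
    central charge. A nonzero c signals a gravitational anomaly.
    """
    return central_charge * math.pi ** 2 * BOLTZMANN_K ** 2 * temperature_k / (3 * PLANCK_H)


# The "quantum" of thermal conductance per kelvin (c = 1), about 9.46e-13 W/K^2.
print(thermal_hall_conductance(1, 1.0))
# At an illustrative 50 mK, the signal is tiny.
print(thermal_hall_conductance(1, 0.05))
```

The tiny magnitude helps explain why the effect is so hard to measure directly.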

Quantum simulations

Monte Carlo simulations are used in a wide variety of fields, from finance to manufacturing to research, to assess the risks and likely outcomes of a given situation. They work by repeatedly drawing random samples of the inputs and averaging the results, which lets them handle a huge range of factors at once and map out the most extreme best- and worst-case scenarios, as well as the possibilities in between.
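
Here's a minimal Python sketch of the basic Monte Carlo idea: estimate the probability of an outcome by simulating it many times and counting how often it happens. The dice example and the helper names are purely illustrative; real risk models sample far richer inputs, but the principle is the same.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


def monte_carlo_probability(
    sample: Callable[[random.Random], T],
    event: Callable[[T], bool],
    n_samples: int,
    seed: int = 0,
) -> float:
    """Estimate P(event) by drawing n_samples random outcomes."""
    rng = random.Random(seed)  # seeded for reproducible runs
    hits = sum(1 for _ in range(n_samples) if event(sample(rng)))
    return hits / n_samples


def roll_two_dice(rng: random.Random) -> int:
    return rng.randint(1, 6) + rng.randint(1, 6)


# Chance of rolling 10 or more with two dice; the exact answer is 6/36, about 0.167.
estimate = monte_carlo_probability(roll_two_dice, lambda total: total >= 10, 100_000)
print(f"estimated {estimate:.3f} vs exact {6 / 36:.3f}")
```

The error of such an estimate shrinks roughly as one over the square root of the number of samples, which is why these simulations lean on raw computing power.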

Quantum Monte Carlo simulations are used to model quantum systems, but the Oxford and Hebrew University scientists found that quantum systems containing gravitational anomalies are far too complex to ever be simulated this way. Some of the quantities that the simulation normally treats as probabilities acquire a negative sign, a difficulty known as the sign problem. Positive and negative contributions then largely cancel out, so the number of samples needed to get a reliable answer grows exponentially with the size of the system.
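
The sign problem can be illustrated with a deliberately simple toy model in Python, which is a hypothetical example and not the system studied in the paper. Each of n independent sites contributes a weight of +1 or −a, so some configurations carry negative weights. Quantum Monte Carlo samples configurations according to the absolute values of the weights and tracks the signs separately; the useful signal is the average sign, which here works out to ((1 − a)/(1 + a))ⁿ and shrinks exponentially as sites are added.

```python
import random


def exact_average_sign(n_sites: int, a: float) -> float:
    """Average sign for the toy model (hypothetical, 0 < a < 1).

    Each site has weight +1 ("up") or -a ("down"). Summing over all
    configurations gives (1 - a)**n for the signed weights and
    (1 + a)**n for their absolute values; the ratio is the average sign.
    """
    return ((1 - a) / (1 + a)) ** n_sites


def sampled_average_sign(n_sites: int, a: float, n_samples: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the average sign.

    Configurations are drawn with probability proportional to |weight|,
    i.e. each site is independently "down" with probability a / (1 + a).
    A configuration's sign is -1 if it has an odd number of down sites.
    """
    rng = random.Random(seed)
    p_down = a / (1 + a)
    total = 0
    for _ in range(n_samples):
        n_down = sum(1 for _ in range(n_sites) if rng.random() < p_down)
        total += -1 if n_down % 2 else 1
    return total / n_samples


def samples_needed(avg_sign: float, rel_error: float = 0.1) -> float:
    """Rough sample count for a given relative error on the average sign.

    The standard error is about sqrt(1 - s^2) / sqrt(M), so the relative
    error is that divided by s; solve for M.
    """
    return (1 - avg_sign ** 2) / (avg_sign ** 2 * rel_error ** 2)


for n in (5, 20, 50, 100):
    s = exact_average_sign(n, a=0.5)
    print(f"{n:>3} sites: average sign {s:.1e}, ~{samples_needed(s):.1e} samples needed")
```

Once the average sign falls far below the statistical noise, the positive and negative contributions can no longer be told apart without an astronomical number of samples.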

Pushing it further, the team says that as a simulated system gets more complex, the computational resources needed to run it, such as processors and memory, must grow along with it. That growth might be linear, meaning that every time the number of simulated particles doubles, the required resources also double. Or it could be exponential, meaning that those resources have to double every time a single new particle is added to the system.
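
The difference between the two growth rates is easy to see with a quick calculation. The Python sketch below uses a hypothetical cost of one unit of resources per particle in the linear case, and two raised to the number of particles in the exponential case; real simulation costs differ, but the shapes of the curves are the point.

```python
def linear_cost(n_particles: int) -> int:
    """Hypothetical linear scaling: one unit of resources per particle."""
    return n_particles


def exponential_cost(n_particles: int) -> int:
    """Hypothetical exponential scaling: resources double with each particle."""
    return 2 ** n_particles


for n in (10, 20, 40, 80):
    print(f"{n:>3} particles: linear {linear_cost(n):>3}, exponential {exponential_cost(n):.2e}")
```

Doubling the particle count doubles the linear cost, but squares the exponential one.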

In the exponential case, simulating just a few hundred electrons would require a computer with a memory made up of more atoms than the universe contains. Since the observable universe contains roughly 10⁸⁰ particles (a 1 followed by 80 zeros), the number of atoms needed to simulate the entire universe this way is beyond comprehension.
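
A back-of-the-envelope check shows where "a few hundred electrons" comes from. Assume (as an illustration, not a claim from the paper) that each electron behaves like a two-level system, so a full quantum description of n electrons needs 2ⁿ numbers, and generously suppose each number could be stored in a single atom. The Python below, with a hypothetical helper name, finds the smallest n for which 2ⁿ exceeds the roughly 10⁸⁰ particles in the observable universe.

```python
def particles_to_exceed(limit: int, states_per_particle: int = 2) -> int:
    """Smallest n such that states_per_particle ** n > limit.

    Uses exact integer arithmetic, so there is no floating-point rounding.
    """
    n = 0
    while states_per_particle ** n <= limit:
        n += 1
    return n


ATOMS_IN_UNIVERSE = 10 ** 80  # the rough estimate quoted in the text
print(particles_to_exceed(ATOMS_IN_UNIVERSE))  # prints 266
```

That's a few hundred, in line with the figure given above.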

"Our work provides an intriguing link between two seemingly unrelated topics: gravitational anomalies and computational complexity," says Zohar Ringel, co-author of the paper. "It also shows that the thermal Hall conductance is a genuine quantum effect: one for which no local classical analogue exists."

The research was published in the journal Science Advances.

Source: Oxford University via EurekAlert! - newatlas.com